Spark for Genomic Data

We have been researching the use of Spark and/or Shark as a backend for our visualisation projects. If you are not familiar with Spark, please take a look at the project documentation first. In short: Spark is a platform for distributed handling of data. Think of Hadoop, but allowing for interactive rather than batch use. One of the first things we considered is whether to start off using Spark or rather Shark, which is based on it but offers a SQL-like syntax instead of a Scala/Python/Java API. But first a word about the data and about the use of Spark to handle it.

Data

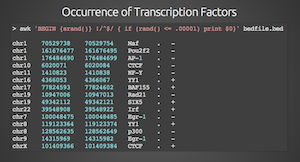

We use a BED file as input format and took the file 201101_encode_motifs_in_tf_peaks.bed to start with. It contains information on transcription factors and where they bind on the genome. This input format is a plain text file with whitespace-separated columns and can easily be parsed.

Parsing the data

We started by exploring the Scala interface to Spark, first from the REPL, which allows for interactive exploration of the API.

The main benefits of using the Scala interface are the following:

- No additional dependencies required

- All the flexibility of Scala as a powerful language, bytecode-compatible with Java

Reading in the data and parsing it can easily be done:

val bed = sc.textFile("201101_encode_motifs_in_tf_peaks.bed")

val bedArray = bed.map(_.split("\\s+"))

This does not do anything yet: map is a lazy transformation, and nothing is computed until an action is called. The method collect() gets the data out of the RDD structure and returns an Array. The first element of the dataset can be selected using:

scala> bedArray take 1

...

res1: Array[Array[java.lang.String]] = Array(Array(chr1, 29386, 29397, Ets, ., -))

Please note that the RDD holds each line as an Array[String], so the result is an Array[Array[String]], which makes it hard to extract the numbers and work with them. In order to convert one line of our data to a 4-tuple, we define the following function and add it as a transformation:

def extractFeatures(line: Array[String]): (String, Int, Int, String) = {

(line(0).toString, line(1).toInt, line(2).toInt, line(3).toString)

}

val bedArray = bed.map(_.split("\\s+")).map(x => extractFeatures(x))

So that we can easily filter:

val result = bedArray filter(x => (x._1 == "chr4" && x._3 > 190930000 && x._2 < 190940000))

It would also be possible to define a class that mirrors the data and read the data into an object of that class for easier access and development.
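As a minimal sketch of that idea, the snippet below uses a case class instead of a tuple. The names BedFeature, parseBed and overlaps are hypothetical and not part of Spark or of our code base, and a plain Scala Seq stands in for the RDD so that it can be pasted into a Scala REPL without a cluster. Only the first input line comes from the data shown above; the other two are made up for illustration.

```scala
import scala.util.Try

// Hypothetical record type mirroring the first four BED columns used in this post.
case class BedFeature(chrom: String, chromStart: Int, chromEnd: Int, name: String) {
  // Same test as the tuple-based filter: the feature ends after the window
  // starts and starts before the window ends.
  def overlaps(c: String, from: Int, to: Int): Boolean =
    chrom == c && chromEnd > from && chromStart < to
}

// Returns None for lines without four usable columns (for example header
// lines or non-numeric coordinates) instead of throwing an exception.
def parseBed(line: String): Option[BedFeature] =
  line.trim.split("\\s+") match {
    case Array(chrom, start, end, name, _*) =>
      Try(BedFeature(chrom, start.toInt, end.toInt, name)).toOption
    case _ => None
  }

// A plain Seq standing in for the RDD of text lines.
val lines = Seq(
  "chr1 29386 29397 Ets . -",
  "chr4 190935000 190935010 Ets . +", // made-up line inside the query window
  "track name=example"                // made-up header line, skipped by parseBed
)

val features = lines.flatMap(l => parseBed(l))
val hits = features.filter(_.overlaps("chr4", 190930000, 190940000))
```

Named fields make the filter easier to read than x._1, x._2 and x._3, and returning an Option keeps malformed lines from aborting the whole job. The same parseBed function should carry over to the RDD version, for instance as bed.flatMap(l => parseBed(l)), although we have not benchmarked that variant.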

A very important aspect of Spark is the possibility to cache intermediate steps in order to retrieve them faster:

val cached = bedArray.cache()

val result = cached filter(x => (x._1 == "chr4" && x._3 > 190930000 && x._2 < 190940000))

Discussion

The Spark framework does what it needs to do: it abstracts away everything that has to do with running code in parallel on a cluster by providing a functional API in Scala (as well as Python and Java).

In a later post, we will take a look at Shark and see how it compares to Spark and which one may be more applicable to our use case.

Learning resources

Please refer to these pages for more information about Spark: